Recently we upgraded customers to ACI 5.2(2f) and plugins were failing, including the installation of a new plugin, the UCSM external switch app for UCS automation.

Anyway, here is the fix:

Symptom: App installation, enable, or disable takes a long time and does not complete. Even after a long wait, acidiag scheduler images does not list the app container images.

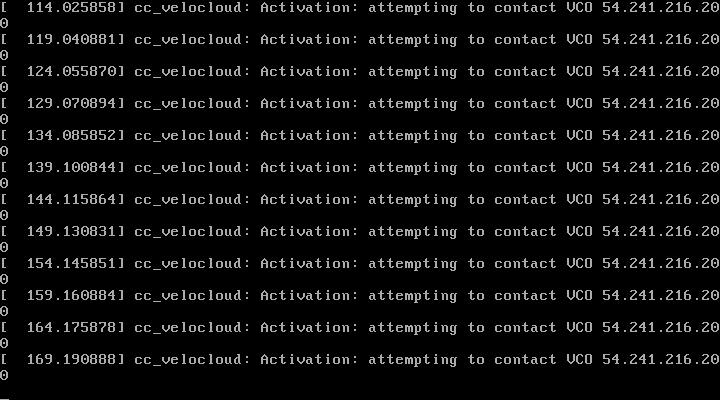

Conditions: Any 5.2.1.x, 5.2.2.x, 5.2.3.x, or 5.2.4.x image can run into this issue. It can affect any scale-out app, including NIR and ExternalSwitch. It usually happens when an unstable app container keeps restarting; acidiag scheduler containers then shows some containers constantly restarting. (This command is available from the 5.2.3.x release.)

Workaround: Run the following on APIC 1:

1) acidiag scheduler disable

2) acidiag scheduler cleanup force

3) acidiag scheduler enable
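
The three steps above can be sketched as a small Python wrapper that runs them in order and stops at the first failure. This is only an illustration, not a Cisco tool: the function name `run_workaround` is hypothetical, and it assumes `acidiag` is on the PATH and signals failure through a non-zero exit code.

```python
import subprocess

# The three workaround commands from the steps above, in order.
WORKAROUND_STEPS = [
    ["acidiag", "scheduler", "disable"],
    ["acidiag", "scheduler", "cleanup", "force"],
    ["acidiag", "scheduler", "enable"],
]


def run_workaround(runner=subprocess.run):
    """Run each step in order and stop at the first non-zero exit code.

    Returns the list of commands that completed successfully.
    """
    completed = []
    for cmd in WORKAROUND_STEPS:
        result = runner(cmd, capture_output=True, text=True)
        if result.returncode != 0:
            # Assumption: acidiag reports failure through its exit code.
            print("step failed:", " ".join(cmd))
            break
        print(result.stdout, end="")
        completed.append(" ".join(cmd))
    return completed
```

The `runner` parameter exists only so the sequence can be dry-run with a stand-in function before touching a real cluster.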

When you detect this issue, first disable any apps, especially ones that can be identified as constantly restarting, and then proceed with these steps. If this is not possible, simply perform the steps after the downgrade completes.

Perform the workaround ONLY ONCE, and only when the APIC cluster is in a healthy state according to acidiag avread: all APICs should have the same version and health=255. After the workaround, wait 30-45 minutes before checking the status of the app.
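
The health precondition can be checked mechanically from saved acidiag avread output. The sketch below is a rough illustration under assumptions: it expects each appliance entry to carry a `version=` token and a health value written either as `health=255` or as `health=(applnc:255 ...`, which may not match every release. The function name `cluster_ready` is hypothetical.

```python
import re

# Assumption: health appears as "health=255" or "health=(applnc:255 ...".
HEALTH_RE = re.compile(r"health=\(?(?:applnc:)?(\d+)")
# Assumption: each appliance entry carries a "version=<release>" token.
VERSION_RE = re.compile(r"\bversion=(\S+)")


def cluster_ready(avread_text):
    """Return True when every APIC reports health 255 and one common version."""
    healths = [int(h) for h in HEALTH_RE.findall(avread_text)]
    versions = set(VERSION_RE.findall(avread_text))
    return bool(healths) and all(h == 255 for h in healths) and len(versions) == 1
```

If the function returns False, or if the output format differs from these assumptions, read the avread output by hand before running the workaround.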

dev1-ifc1# acidiag scheduler cleanup force

Stopped all apps

[True] APIC-01 – Disabled scheduler

[True] APIC-02 – Disabled scheduler

[True] APIC-03 – Disabled scheduler

[True] APIC-01 – Cleaned up scheduler (forced)

[True] APIC-02 – Cleaned up scheduler (forced)

[True] APIC-03 – Cleaned up scheduler (forced)

dev1-ifc1# acidiag scheduler enable

Enabling scheduler:

[True] APIC-01 – Enabled scheduler

[True] APIC-02 – Enabled scheduler

[True] APIC-03 – Enabled scheduler
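
Output like the above can be scanned for any APIC that did not report [True]. This is a minimal sketch based only on the line shape shown here; that a failure would appear as [False] is an assumption, and `failed_nodes` is a hypothetical helper name.

```python
import re

# One result line looks like "[True] APIC-01 - Enabled scheduler".
# The separator may be a hyphen or an en dash, depending on how the text was copied.
# Assumption: a failed node would be reported as "[False]".
RESULT_RE = re.compile(r"\[(True|False)\]\s+(\S+)\s+[-\u2013]\s+(.+?)\s*$")


def failed_nodes(output):
    """Return (node, message) pairs for every result line not marked [True]."""
    failures = []
    for line in output.splitlines():
        match = RESULT_RE.search(line)
        if match and match.group(1) != "True":
            failures.append((match.group(2), match.group(3)))
    return failures
```

An empty list means every parsed line was marked [True]; it does not replace checking the app status after the 30-45 minute wait.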